TIMELINE

1 Semester in 2020

ROLE

Designer + Programmer

PURPOSE

Research for Epic Games

INTRODUCTION

VR Architecture Tool

In collaboration with Epic Games (Unreal Engine), this project aimed to transform how architects interact with their tools. While Virtual Reality has long been used in architecture for late-stage presentations, we asked: What if VR became a workspace, not just a showcase tool?

We built a tool that allowed architects to sketch, inspect, and iterate within VR—bringing early-stage design decisions into a 1:1 spatial context. Many architects rely on simulation tools to model behavior—such as pedestrian flow or daylight exposure—but these are often presented through abstract heatmaps or clunky dashboards. We integrated these simulations into the VR space, translating raw data into embodied, intuitive visuals.

This shift in human-computer interaction let architects understand performance through the spatial qualities they already work with, rather than through numbers. As a result, design cycles became faster, more confident, and more closely aligned with spatial intuition.

RESEARCH

How are simulations done today?

Architects often view spatial simulation data—like crowd flow or occupancy density—on 2D screens, relying on abstract visuals like heatmaps, charts, or color gradients. These inherently spatial phenomena are reduced to numbers and overlays, requiring designers to interpret and imagine their real-world impact. As a result, they miss the intuitive, embodied understanding of how a space actually feels, leading to less confident or delayed decisions.

RESEARCH

The Interaction Challenge

We began by examining the core assumptions surrounding VR in architectural workflows:

VR communicates space, scale, and materiality far better than a 2D screen.

Designing 3D spaces should feel more natural in a 3D environment.

Modern VR toolkits offer the fidelity needed to model complex structures.

Yet despite this potential, most architects still use VR solely for late-stage visualization.

RESEARCH

Understanding Today’s Process

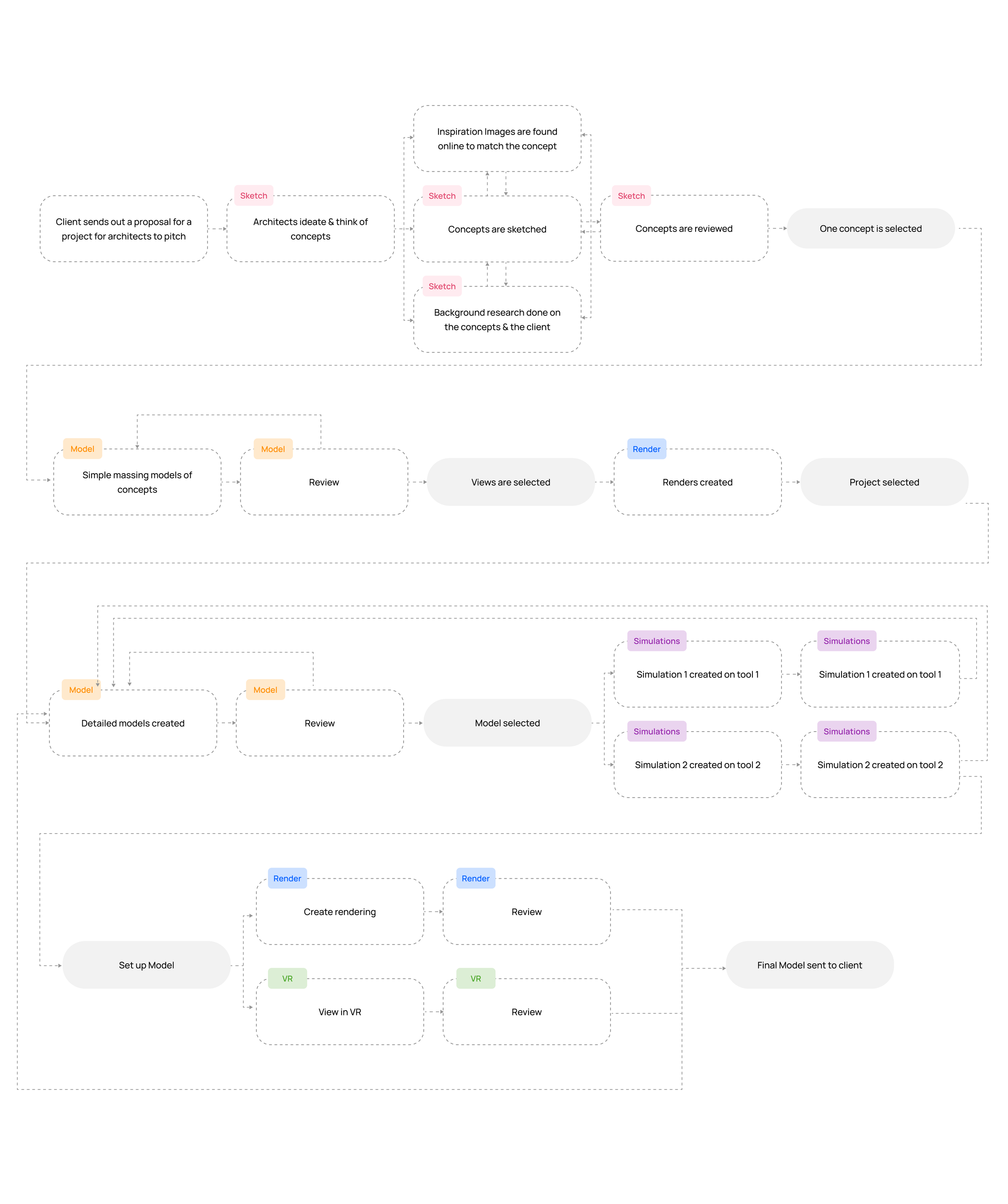

We wanted to understand every stage of the design process: sketching, modeling, simulating, rendering, and so on.

RESEARCH

Current VR Platforms Used

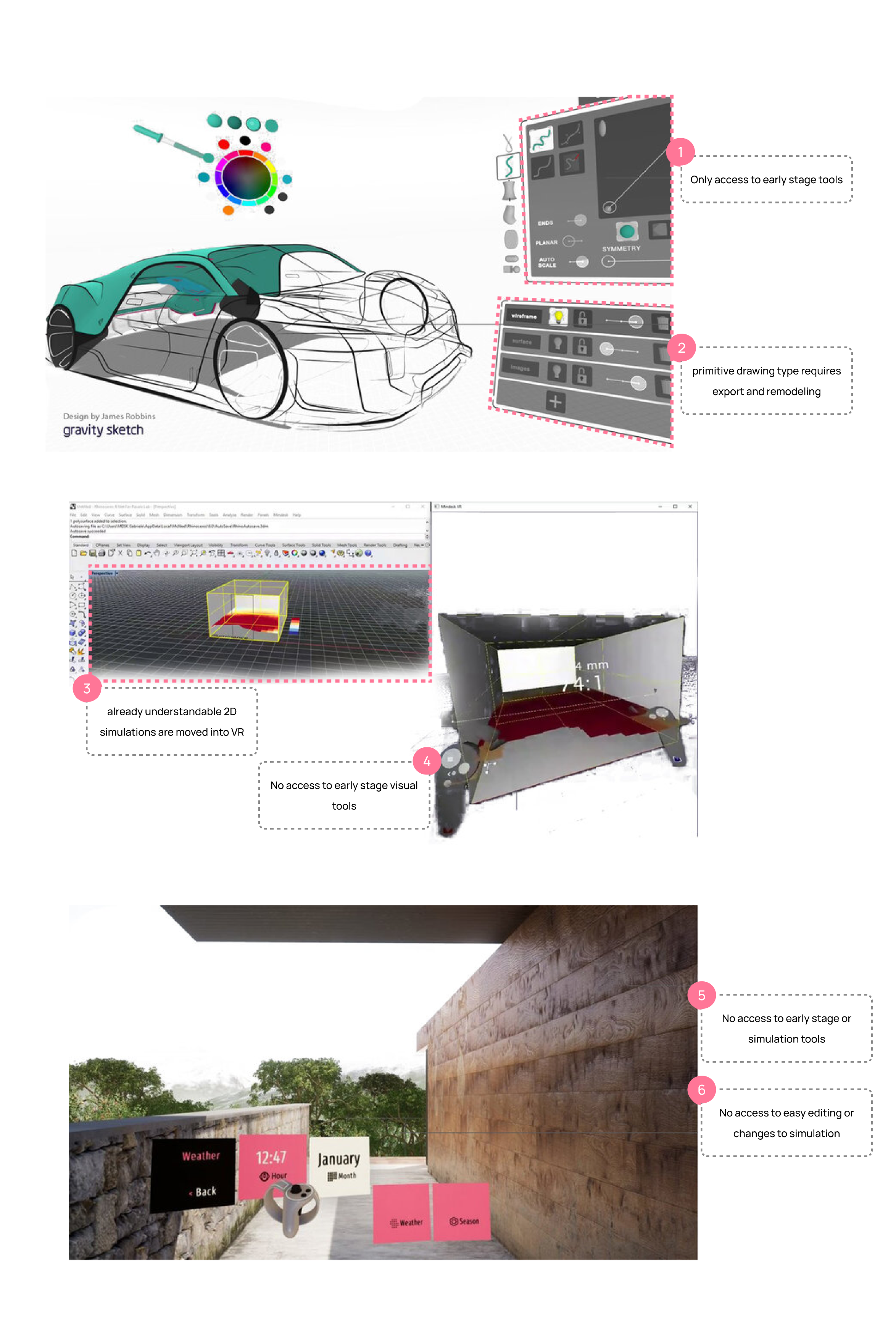

We wanted to understand the issues with today’s VR tools. Why can’t they serve an architect’s needs?

RESEARCH

User Journey

General Workflow Pain Points:

Extremely long workflow

Too many conversions and exports

Mistakes set you far back

VR Pain Points:

Complex features make editing in VR difficult

Fixing a mistake requires re-exporting and re-importing the model

Simulations are an important part of the process

ANALYZE

Research Takeaways

Extremely long pipelines make it difficult to iterate quickly

Every stage requires multiple tools and exports

Later stages yield significantly more information, so a mistake discovered late can send you back to the start

The tools are not connected to each other

There is no need to view things in VR that can be understood in 2D

ANALYZE

User Satisfaction & Opportunities

Where in the process do the pain points frustrate users most, and where are the opportunities to build a solution?

ANALYZE

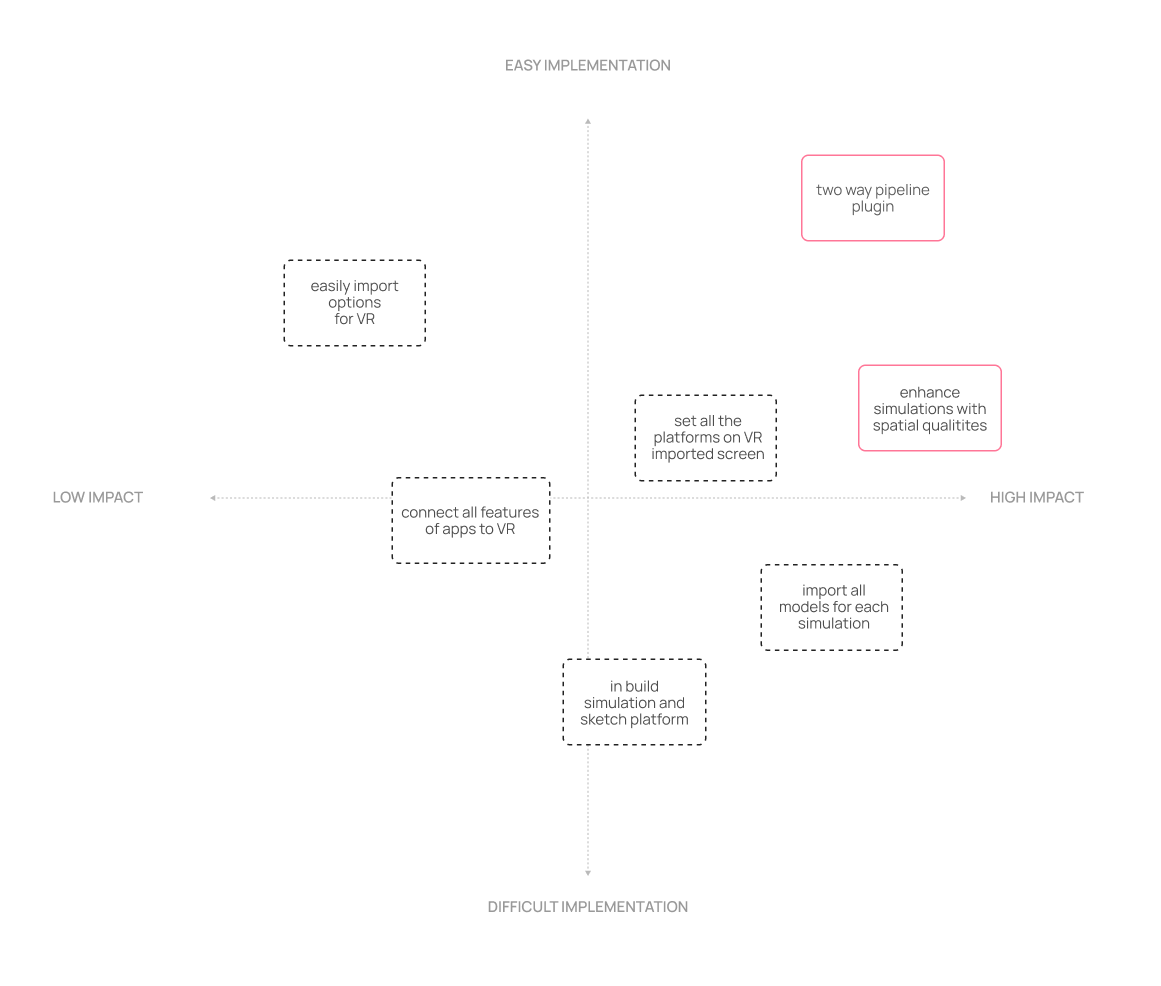

Feasibility Matrix

How feasible are the different options for combining VR and simulations?

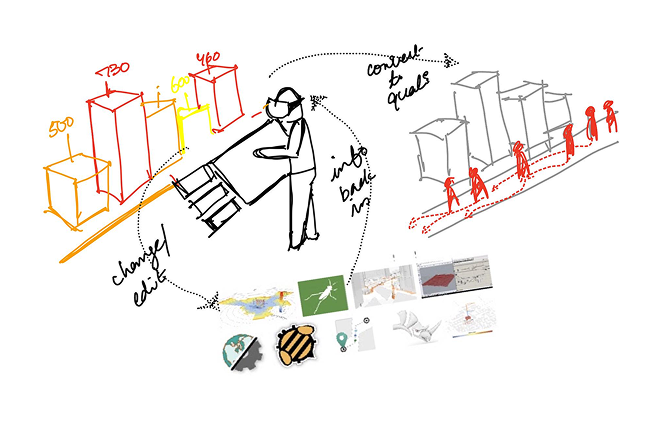

IDEATE

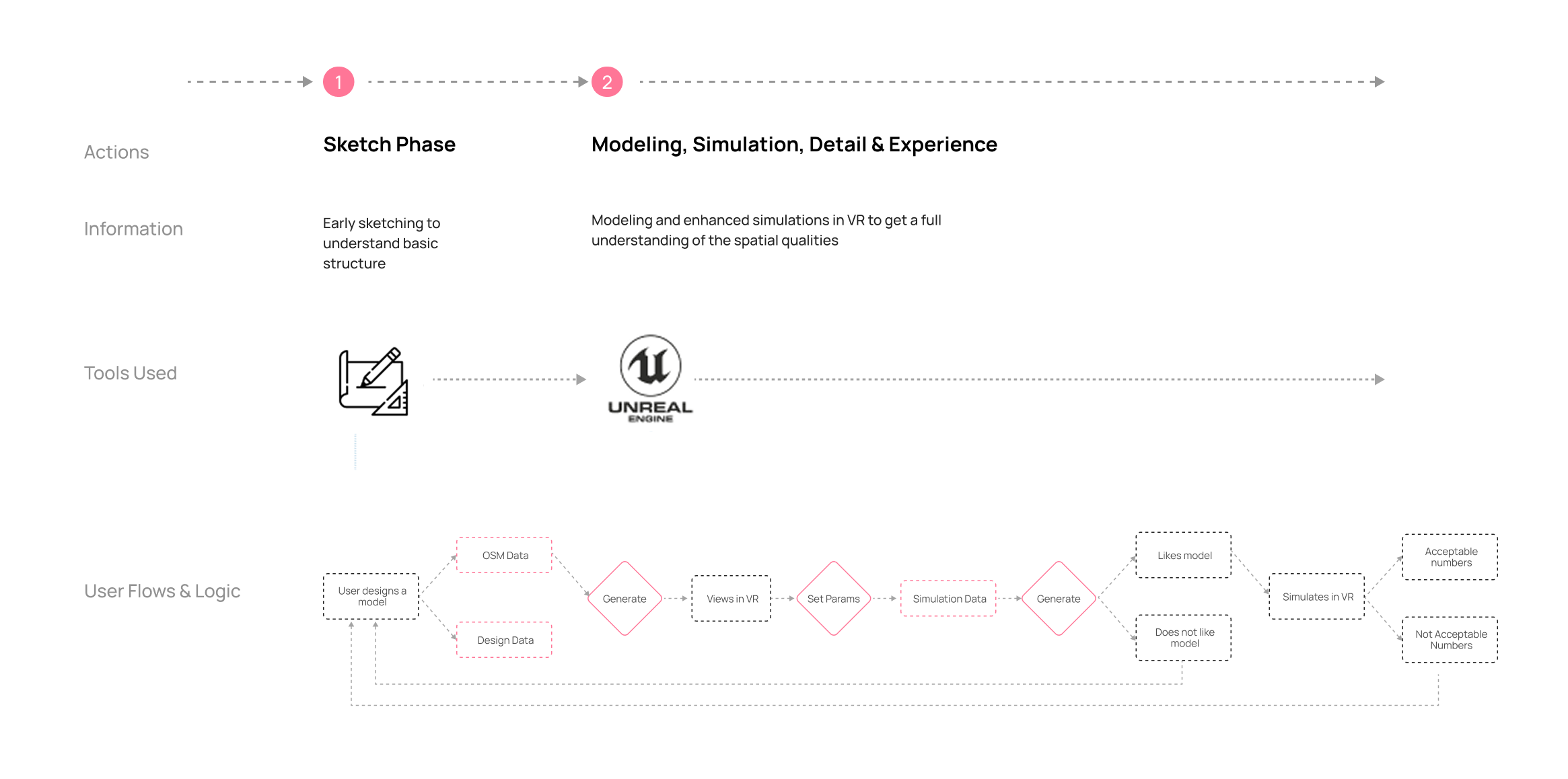

User Flows

To streamline the workflow and improve the user experience, we brought multiple design and simulation steps into a single immersive VR environment—enabling users to work end-to-end with greater clarity, speed, and spatial understanding.

IDEATE

Information Flow

We integrated data from multiple tools into a two-way pipeline, allowing users to stay within a single immersive workspace without context-switching across platforms. This not only reduced friction but also enhanced the quality and clarity of each simulation within the VR environment.

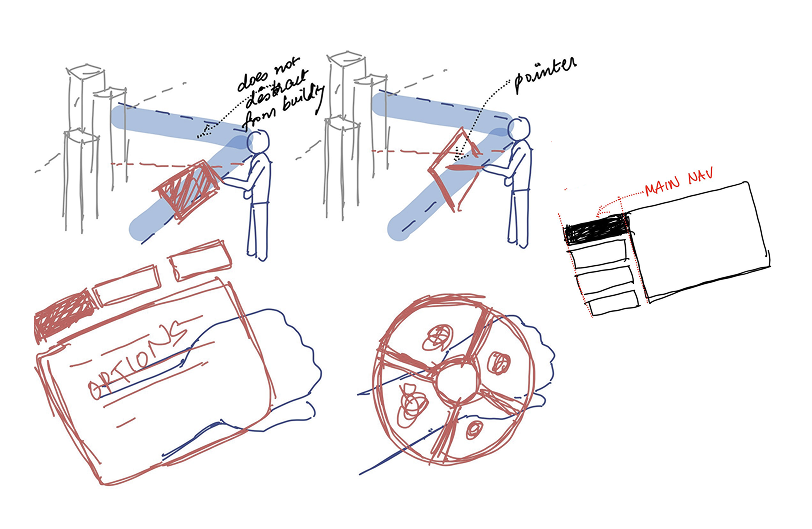

PROTOTYPE

XR Interactions

Single immersive workspace: Users stay in one unified VR environment, removing the need to switch between tools or platforms.

Enhanced spatial understanding: Each step—modeling, simulation, and analysis—is reimagined in VR to provide full-scale, intuitive experiences of data and design decisions.

UI Designed for Spatial Clarity

The interface was intentionally minimal and context-aware—positioned just above the user’s hand to remain accessible without interrupting immersion or distracting from the spatial qualities of the environment.

Color Choice: UI colors were selected to avoid clashing with architectural materials or simulation overlays, ensuring visual clarity.

Spatial Transparency: A subtle glass effect was used to maintain depth perception and spatial awareness.

Typography for VR: Fonts were chosen with increased letter spacing to counteract VR-specific issues like halation and maintain readability at all angles.

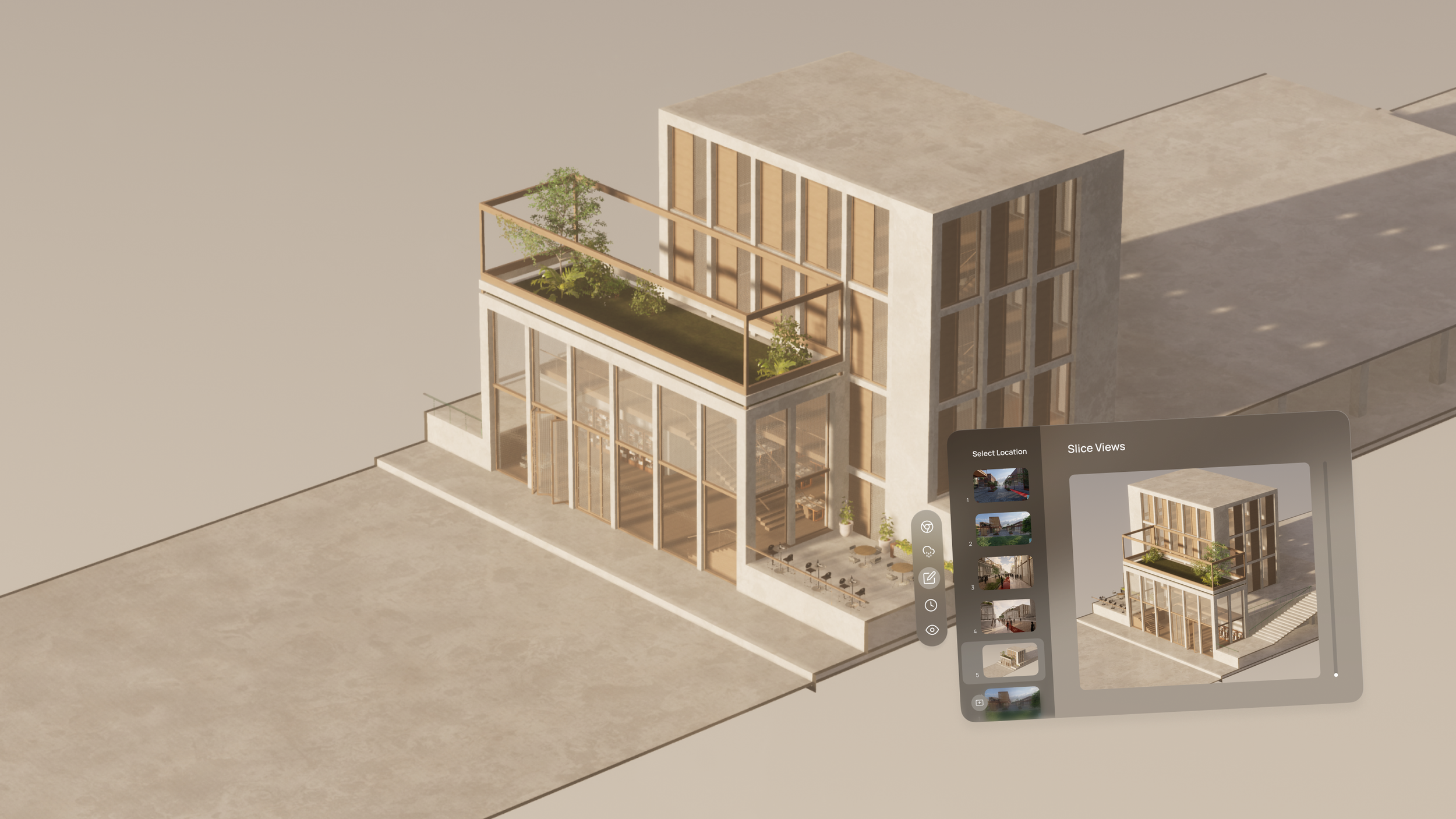

PROTOTYPE

Traditional vs Final Designs

Traditional simulation outputs—like crowd flow or energy usage—are typically displayed as flat, abstract 2D diagrams. These visuals require users to interpret complex spatial phenomena through color gradients, charts, or top-down views, which often leads to misjudgments. Without a sense of scale, movement, or physical presence, designers miss the intuitive understanding needed to make confident spatial decisions.

Merging Simulation and Design into a Single Spatial Workflow

I translated traditional simulation data directly into the VR environment, allowing users to see and feel the impact of simulations in real time. This integration combined two previously separate workflows—design and analysis—into one seamless, immersive experience.

Turning Data into Experience

I transformed abstract simulation outputs—like crowd density and circulation—into intuitive spatial cues, such as virtual people walking through the space. This allowed designers to feel the architecture in motion, making performance insights immediately understandable and emotionally resonant.
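As a rough illustration of the idea, and not the project's actual Unreal Engine implementation, the sketch below turns a crowd-density heatmap into positions where virtual people could be placed. The grid layout, the people-per-square-metre unit, and the function names `agents_per_cell` and `spawn_positions` are all assumptions made for this example.

```python
import random


def agents_per_cell(density_grid, cell_size_m):
    """Convert densities (assumed unit: people per square metre) into head counts per cell."""
    cell_area = cell_size_m * cell_size_m
    return [[round(density * cell_area) for density in row] for row in density_grid]


def spawn_positions(density_grid, cell_size_m, seed=0):
    """Scatter one (x, y) floor position per virtual person inside its grid cell."""
    rng = random.Random(seed)  # seeded so the same heatmap always gives the same crowd
    positions = []
    counts = agents_per_cell(density_grid, cell_size_m)
    for row_index, row in enumerate(counts):
        for col_index, count in enumerate(row):
            for _ in range(count):
                x = (col_index + rng.random()) * cell_size_m
                y = (row_index + rng.random()) * cell_size_m
                positions.append((x, y))
    return positions


# Hypothetical 2 x 2 heatmap with 2 m cells.
heatmap = [[0.0, 0.5],
           [1.0, 0.25]]
crowd = spawn_positions(heatmap, cell_size_m=2.0)
print(len(crowd), "virtual people placed")
```

In a sketch like this, a dense cell reads as a visibly crowded patch of floor rather than a red square on a plan, which is the shift from data to experience described above.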

Real-Time Environmental Feedback, No Exports Required

Users can experience key environmental factors—like views, lighting, and weather conditions—directly within the VR space. This eliminates the need for constant exporting, enabling rapid iteration and more informed design decisions in context.

Inspect Sections in Full Scale, Not Just on Paper

Designers can easily create and view sectional cuts directly in VR, allowing them to walk through and examine spatial relationships at 1:1 scale. What was once confined to flat 2D drawings is now an interactive, immersive inspection tool.
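One common way to think about a sectional cut is as a clipping plane: geometry on one side stays visible and everything on the other side is hidden. The sketch below shows that test on individual points. It is a minimal example under that assumption, not a description of how the tool itself was built, and the names `signed_distance` and `visible_after_cut` are hypothetical.

```python
def signed_distance(point, plane_point, plane_normal):
    """Distance from point to the plane along the normal (normal assumed unit length)."""
    return sum((p - q) * n for p, q, n in zip(point, plane_point, plane_normal))


def visible_after_cut(points, plane_point, plane_normal):
    """Keep only the points on or behind the cutting plane."""
    return [p for p in points if signed_distance(p, plane_point, plane_normal) <= 0.0]


# Hypothetical cut: a vertical plane at x = 3 m, facing along +x.
corners = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (5.0, 1.0, 0.0)]
print(visible_after_cut(corners, plane_point=(3.0, 0.0, 0.0), plane_normal=(1.0, 0.0, 0.0)))
```

Moving `plane_point` along the normal slides the section through the building, which is the kind of interaction a designer would drive by hand in VR.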